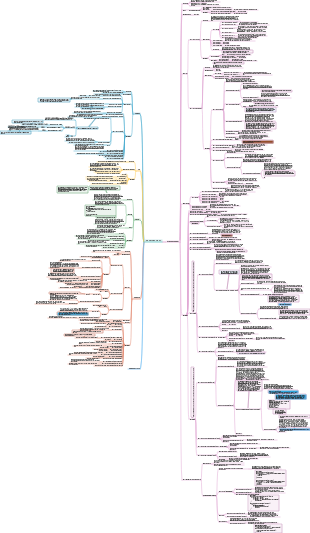

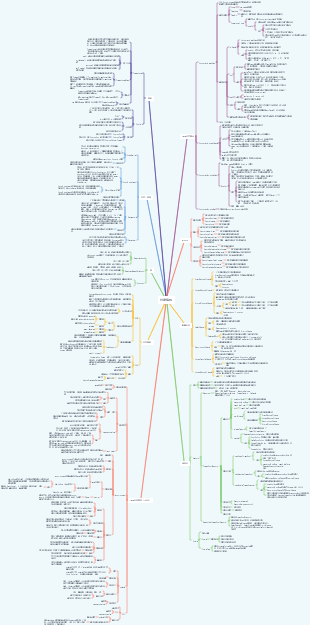

MindMap Gallery Java concurrency system knowledge map (concurrent programming) mind map

- 109

Java concurrency system knowledge map (concurrent programming) mind map

This is a mind map about Java concurrency system knowledge map (concurrent programming), including blocking queue, concurrency basics, locks, JAVA memory model and other aspects of knowledge.

Edited at 2023-11-06 19:35:07- bacteria

This is a mind map about bacteria, and its main contents include: overview, morphology, types, structure, reproduction, distribution, application, and expansion. The summary is comprehensive and meticulous, suitable as review materials.

- Plant asexual reproduction

This is a mind map about plant asexual reproduction, and its main contents include: concept, spore reproduction, vegetative reproduction, tissue culture, and buds. The summary is comprehensive and meticulous, suitable as review materials.

- Reproductive development of animals

This is a mind map about the reproductive development of animals, and its main contents include: insects, frogs, birds, sexual reproduction, and asexual reproduction. The summary is comprehensive and meticulous, suitable as review materials.

Java concurrency system knowledge map (concurrent programming) mind map

- bacteria

This is a mind map about bacteria, and its main contents include: overview, morphology, types, structure, reproduction, distribution, application, and expansion. The summary is comprehensive and meticulous, suitable as review materials.

- Plant asexual reproduction

This is a mind map about plant asexual reproduction, and its main contents include: concept, spore reproduction, vegetative reproduction, tissue culture, and buds. The summary is comprehensive and meticulous, suitable as review materials.

- Reproductive development of animals

This is a mind map about the reproductive development of animals, and its main contents include: insects, frogs, birds, sexual reproduction, and asexual reproduction. The summary is comprehensive and meticulous, suitable as review materials.

- Recommended to you

- Outline

Concurrent programming

Java Memory Model (JMM)

Thread communication mechanism

memory sharing

Java Adoption

messaging

memory model

Reorder

For the performance of the program, the processor and compiler will reorder the program.

condition

The results of program execution cannot be changed in a single-threaded environment.

Reordering is not allowed if data dependencies exist

question

Reordering may result in unsafe data in a multi-threaded environment

sequential consistency

Theoretical reference model in multi-threaded environment

Provides strong memory visibility guarantees for programs

characteristic

All operations in a thread must be performed in the order of the program

All threads can only see a single order of operation execution, regardless of whether the program is synchronized or not

Each operation must be performed atomically and immediately visible to all threads

happens-before

The core theory in JMM ensures memory visibility

In JMM, if the results of one operation need to be visible to another operation, then there must be a happens-before relationship between the two operations.

theory

If one operation happens-before another operation, then the execution results of the first operation will be visible to the second operation, and the execution order of the first operation will be before the second operation.

The existence of a happens-before relationship between two operations does not mean that they must be executed in the order specified by the happens-before principle. If the execution result after reordering is consistent with the execution result according to the happens-before relationship, then this reordering is not illegal.

as-if-serial

All operations can be reordered for optimization, but you must ensure that the results of the reordering cannot be changed.

synchronized

Synchronization, heavyweight lock

principle

synchronized can ensure that when a method or code block is running, only one method can enter the critical section at the same time. It can also ensure the memory visibility of shared variables.

lock object

Ordinary synchronization method, the lock is the current instance object

Static synchronization method, the lock is the class object of the current class

Synchronized method block, the lock is the object inside the brackets

Implementation Mechanism

Java object header

The synchronized lock is stored in the Java object header.

Includes two parts of data

Mark Word (mark field)

Mark Word is designed as a non-fixed data structure to store as much data as possible in a very small space. It will reuse its own storage space according to the state of the object.

include

Hash code (HashCode), GC generation age, lock status flag, lock held by thread, biased thread ID, biased timestamp

Klass Pointer (type pointer)

monitor

Owner

Initially NULL means that no thread currently owns the monitor record. When the thread successfully owns the lock, the thread's unique identifier is saved. When the lock is released, it is set to NULL.

Lock optimization

spin lock

The thread waits for a period of time and will not be suspended immediately to see whether the thread holding the lock will release the lock soon (cyclic method)

The number of spin words is difficult to control (-XX:preBlockSpin)

Existential theory: Threads frequently suspend and wake up with a heavy burden. It can be considered that each thread holds the lock for a short time, and the gain outweighs the gain after the thread is suspended and then woken up.

shortcoming

The number of spins cannot be determined

adaptive spin lock

The number of spins is no longer fixed. It is determined by the previous spin time on the same lock and the status of the lock owner.

If the spin is successful, the number of spins can be increased. If acquisition of the lock fails frequently, the number of spins will be reduced.

lock elimination

If there is no data competition, the JVM will eliminate the lock mechanism

Judgments based

Variable escape

lock roughening

Connect multiple consecutive locking and unlocking operations together to expand into a larger lock. For example, acquiring a lock inside a for loop

lightweight lock

Reduce the performance consumption caused by traditional heavyweight locks using operating system mutexes without multi-thread competition.

Acquire and release locks through CAS

performance basis

For most locks, there will be no competition during the entire life cycle.

shortcoming

In a multi-threaded environment, its operating efficiency is slower than that of heavyweight locks.

bias lock

In order to minimize unnecessary lightweight lock execution paths without multi-thread competition

Mainly avoid unnecessary CAS operations as much as possible. If the competition lock fails, upgrade to a lightweight lock.

volatile

characteristic

Volatile visibility: When reading a volatile, you can always see the final write to this variable.

volatile atomicity: volatile is atomic for a single read/write (32-bit Long, Double), except for compound operations, such as i;

Implementation Mechanism

memory barrier

memory semantics

When writing a volatile variable, JMM will immediately refresh the shared variable value in the local memory corresponding to the thread to the main memory.

When reading a volatile variable, JMM will set the local memory corresponding to the thread to invalid and read the shared variable directly from the main memory.

operating system semantics

Main memory, cache (thread private) cache consistent?

solution

By adding LOCK# to the bus

Via cache coherence protocol (MESI protocol)

memory model

Reorder

happens-before

DCL

Singleton pattern

DCL

Reorder

happens-beofre

solution

volatile solution

Disable reordering

Solution based on class initialization

Use the classloder mechanism to ensure that there is only one thread when initializing the instance. The JVM will acquire a lock during the class initialization phase. This lock can synchronize the initialization of the same class by multiple threads.

Concurrency basics

AQS

AbstractQueuedSynchronizer, synchronizer, implements the core basic components of JUC

Solved a large number of detailed issues involved in implementing synchronizers in subclasses, such as obtaining synchronization status and FIFO synchronization queue.

Using the template method pattern, AQS implements a large number of common methods, and subclasses implement their abstract methods through inheritance to manage synchronization status.

CLH sync queue

FIFO bidirectional queue, AQS relies on it to solve the management problem of synchronization state

The first node wakes up and waits for the queue to be added to the end of the CLH synchronization queue.

Synchronous state acquisition and release

Exclusive

Get lock

Get synchronization status: acquire

AcquireInterruptibly: acquireInterruptibly

Timeout acquisition: tryAcquireNanos

release lock

release

shared

Get lock

acquireShared

release lock

releaseShared

Thread blocking and waking up

When a thread acquires the lock, other threads need to block when acquiring it again. When the thread releases the lock, AQS is responsible for waking up the thread.

LockSupport

Is the basic thread blocking primitive used to create locks and other synchronization classes

Each thread using LockSupport is associated with a permission. If the permission is available and can be used in the process, calling park() will return immediately, otherwise it may block. If the license is not yet available, you can call unpark to make it available

park(), unpark()

CAS

Compare And Swap, the core and most basic theory of the entire JUC system

Memory value V, old expected value A, and value to be updated B. If and only if the value of memory value V is equal to the old expected value A, the value of memory value V will be modified to B, otherwise nothing will be done.

There are four parameters in native

defect

Cycle time too long

Only one shared variable can be guaranteed to be atomically operated

ABA problem

solution

version number

AtomicStampedReference

Lock

ReentrantLock

Reentrant lock is a recursive non-blocking synchronization mechanism

A more powerful and flexible locking mechanism than synchronized, which can reduce the probability of deadlock.

Divided into fair lock and unfair lock

The bottom layer is implemented using AQS and inherits AQS through internal Sync.

ReentrantReadWriteLock

Read-write lock, two locks: shared lock: read lock, exclusive lock: write lock

Supports fairness, unfairness, reentrancy and lock degradation

Lock downgrade: According to the order of acquiring write lock, acquiring read lock and releasing write lock, write lock can be downgraded to read lock.

Condition

Lock provides Condition, which is more detailed and flexible for thread waiting and waking up operations.

A Condition queue is maintained internally. When the current thread calls the await() method, a node (Node) will be constructed from the current thread, and the node will be added to the end of the queue.

Concurrency tools

CyclicBarrier

It allows a group of threads to wait for each other until a common barrier point is reached

In layman's terms: let a group of threads be blocked when they reach a barrier. The barrier will not open until the last thread reaches the barrier, and all threads intercepted by the barrier will continue to work.

The bottom layer is implemented using ReentrantLock Condition

Application scenarios

The operation of merging multi-thread results is used to calculate data in multiple threads and finally merge the calculation results.

CountDownLatch

It allows one or more threads to wait until a set of operations being performed in other threads is completed.

Initializes CountDownLatch with the given count. Because the countDown() method is called, the await method blocks until the current count reaches zero. Afterwards, all waiting threads are released and all subsequent calls to await return immediately. This behavior only occurs once - the count cannot be reset. If you need to reset the count, consider using a CyclicBarrier.

Difference from CyclicBarrier

The function of CountDownLatch is to allow 1 or N threads to wait for other threads to complete execution; while CyclicBarrier allows N threads to wait for each other.

CountDownLatch's counter cannot be reset; CyclicBarrier's counter can be reset and used, so it is called a cyclic barrier.

Internally implemented using shared locks

Semaphore

signal

A counter that controls access to multiple shared resources

Conceptually, a semaphore maintains a permission set. If necessary, each acquire() blocks until the permission is available, and then acquires the permission. Each release() adds a permission, potentially releasing a blocking getter. However, instead of using the actual license object, Semaphore just counts the number of available licenses and takes action accordingly

The semaphore Semaphore is a non-negative integer (>=1). When a thread wants to access a shared resource, it must first obtain the Semaphore. When Semaphore > 0, obtain the resource and set Semaphore - 1. If the Semaphore value = 0, it means that all shared resources have been occupied by other threads, and the thread must wait for other threads to release the resources. When the thread releases the resource, the Semaphore is 1

Application scenarios

Often used to limit the number of threads that can access certain resources (physical or logical)

Internally implemented using shared locks

Exchanger

A synchronization point for threads that can pair and swap elements in a pair

Allows data to be exchanged between concurrent tasks. Specifically, the Exchanger class allows synchronization points to be defined between two threads. When both threads reach the synchronization point, they exchange data structures, so the first thread's data structure goes into the second thread, and the second thread's data structure goes into the first thread

other

ThreadLocal

A solution to the problem of member variables in a multi-threaded environment, but has nothing to do with thread synchronization. The idea is to create a separate copy of the variable for each thread, so that each thread can independently change its own copy of the variable without affecting the corresponding copies of other threads.

ThreadLocal is not used to solve the problem of shared variables, nor does it exist to coordinate thread synchronization, but is a mechanism introduced to facilitate each thread to handle its own state.

four methods

get(): Returns the value in the current thread's copy of this thread-local variable

initialValue(): Returns the "initial value" of the current thread for this thread local variable

remove(): Remove the value of this thread local variable in the current thread

set(T value): Sets the value in the current thread's copy of this thread-local variable to the specified value

ThreadLocalMap

The key to implementing thread isolation mechanism

Each Thread has a member variable of type ThreadLocal.ThreadLocalMap inside, which is used to store a copy of the actual ThreadLocal variable.

Provides a method to store a copy of each thread's variables using key-value pairs. The key is the current ThreadLocal object, and the value is the variable copy of the corresponding thread.

be careful

The ThreadLocal instance itself does not store a value, it just provides a key to find a copy of the value in the current thread.

It is ThreadLocal contained in Thread, not Thread contained in ThreadLocal

memory leak problem

ThreadLocalMap

key is a weak reference value is a strong reference and cannot be recycled

Explicitly call remove()

Fork/Join

A framework for executing tasks in parallel is a framework that divides large tasks into several small tasks, and finally aggregates the results of each small task to obtain the results of the large task.

main idea

"Partition"

fork decomposes tasks and join collects data

job theft

A thread steals tasks from other queues for execution

The thread that executes the block helps the slow thread execute the task and improves the efficiency of the entire task.

The queue should use a two-way queue

core class

ForkJoinPool

Thread pool for executing tasks

ForkJoinTask

Represents tasks, task abstraction for ForkJoinPool

ForkJoinWorkerThread

Worker thread that performs tasks

Java concurrent collections

ConcurrentHashMap

CAS Synchronized to ensure the safety of concurrent updates, the bottom layer uses an array linked list/red-black tree storage structure

Important inner classes

Node

key-value key-value pair

TreeNode

Red-black tree node

TreeBin

It is equivalent to a red-black tree. Its construction method is actually the process of constructing a red-black tree.

ForwardingNode

Auxiliary node, used for ConcurrentHashMap expansion operation

sizeCtl

Control identifier, used to control table initialization and expansion operations

meaning

A negative number indicates that initialization or expansion operations are in progress.

-1 means initializing

-N indicates that there are N-1 threads undergoing expansion operations

A positive number or 0 indicates that the hash table has not been initialized. This value indicates the size of initialization or next expansion.

Important operations

initTable

ConcurrentHashMap initialization method

Only one thread can participate in the initialization process, other threads must suspend

The constructor does not perform the initialization process. The initialization is actually triggered by the put operation.

step

sizeCtl < 0 means initialization is in progress and the thread is suspended

The thread obtains the initialization qualification (CAS(SIZECTL, sc, -1)) to perform the initialization process

After the initialization step is completed, set sizeCtl = 0.75 * n (the next expansion threshold), indicating the size of the next expansion

put

main idea

Calculate the position of the node inserted in the table based on the hash value. If the position is empty, insert it directly, otherwise insert it into a linked list or tree.

The real situation is more complicated

step

The table is null and the thread enters the initialization step. If other threads are initializing, the thread hangs.

If the current i position inserted is null, it means that this position is inserted for the first time. Just use CAS to insert the node. If the insertion is successful, addCount is called to determine whether expansion is needed. If the insertion fails, continue matching (spin)

If the hash of the node == MOVED (-1), it means that a thread is expanding, and it will enter the expansion process.

In other cases, nodes are inserted according to the linked list or red-black tree structure, but this process requires locking (synchronized)

get

step

table==null;return null

Get from linked list/red-black tree node

Expansion

Multi-thread expansion

step

Construct a nextTable whose size is twice the original size. This step is completed in a single-threaded environment.

Copy the contents of the original table to nextTable. This step allows multi-threaded operations.

The process of converting a linked list into a red-black tree

The number of elements in the linked list reaches the threshold 8, then the linked list is converted into a red-black tree

Red-black tree algorithm

The difference between 1.8 and 1.7

ConcurrentLinkedQueue

An unbounded thread-safe queue based on link nodes, using the FIFO principle to sort elements, and internally implemented using the CAS algorithm

immutability

The next of the last element in the queue is null

The items of all undeleted nodes in the queue cannot be null and can be traversed from the head node.

For the node to be deleted, instead of setting it to null directly, first set its item field to null (the iterator will skip nodes with null item)

Allow head and tail updates to lag. What does it mean? This means that head and tail do not always point to the first element and the last element (explained later)

Invariance and variability of head

Invariance and variability of tail

Subtlety: CAS is used to complete data operations while allowing inconsistency in the queue, and weak consistency is fully demonstrated.

ConcurrentSkipListMap

The third key-value data structure: SkipList (skip list)

SkipList

Balanced binary tree structure

Skip list allows the sorted data to be distributed in a multi-layer linked list, using a 0-1 random number to determine whether a data will climb upward or not, using an algorithm of "exchanging space for time". A forward pointer is added to each node, and some nodes that are impossible to be involved can be ignored when inserting, deleting, and searching, thereby improving efficiency.

characteristic

It is composed of many layers of structure, and levels are randomly generated through a certain probability.

Each level is an ordered linked list. The default is ascending order. It can also be sorted according to the Comparator provided when creating the mapping, depending on the constructor used.

The lowest level (Level 1) linked list contains all elements

If an element appears in the linked list of Level i, then it will also appear in the linked lists below Level i.

Each node contains two pointers, one pointing to the next element in the same linked list and one pointing to the element one level below.

Find, delete, add

ConcurrentSkipListSet

Internally implemented using ConcurrentSkipListMap

atomic

basic type class

Used to update basic types atomically

AtomicBoolean

Atomic update boolean type

AtomicInteger

Atomic update integer

AtomicLong

Atomic update long

array

Update an element in an array atomically

AtomicIntegerArray

Atomic update of elements in an integer array

AtomicLongArray

Atomic update of elements in long integer array

AtomicReferenceArray

Atomic update of elements in a reference type array

reference type

If you want to update multiple variables atomically, you need to use the class provided by this atomic update reference type.

AtomicReference

Atomic update of reference types

AtomicReferenceFieldUpdater

Atomic update of fields in reference types

AtomicMarkableReference

Atomic update of reference types with flag bits

Field class

If we only need a certain field in a certain class, then we need to use atomic update of the field class

AtomicIntegerFieldUpdater

Updater for atomically updating integer fields

AtomicLongFieldUpdater

Updater for atomically updating long fields

AtomicStampedReference

Atomic update of reference type with version number

blocking queue

ArrayBlockingQueue

A FIFO bounded blocking queue implemented as an array

ArrayBlockingQueue is bounded and fixed. The size is confirmed during the constructor. Changes are not supported after confirmation.

"Fairness" is not guaranteed in a multi-threaded environment

accomplish

ReentrantLock

Condition

LinkedBlockingQueue

Link-based, unbounded FIFO blocking queue

PriorityBlockingQueue

Unbounded blocking queue with priority support

By default, elements are sorted in ascending order in natural order. You can sort elements by specifying a Comparator.

binary heap

Classification

max heap

The key value of the parent node is always greater than or equal to the key value of any child node

min heap

The key value of the parent node is always less than or equal to the key value of any child node

The adding operation is constantly "rising up", while the deletion operation is constantly "falling down"

accomplish

ReentrantLock Condition

binary heap

DelayQueue

Unbounded blocking queue that supports delayed acquisition of elements

application

Cache: clear the cached data that has expired in the cache

Task timeout processing

accomplish

ReentrantLock Condition

Priority queue sorted according to Delay time: PriorityQueue

Delayed interface

Used to mark objects that should be executed after a given delay time

This interface requires that implementing classes that implement it must define a compareTo method that provides ordering consistent with the getDelay method of this interface.

SynchronousQueue

A blocking queue with no capacity

application

To exchange work, the producer's thread and the consumer's thread are synchronized to deliver certain information, events or tasks

Difficult to understand, has difficulty with Exchanger

LinkedTransferQueue

Unbounded blocking queue composed of linked list

Equivalent to a superset of ConcurrentLinkedQueue, SynchronousQueue (in fair mode), unbounded LinkedBlockingQueues, etc.

preemptive mode

If it is available, take it directly. If not, it will occupy this position until it is obtained or times out or interrupted.

LinkedBlockingDeque

A two-way blocking queue composed of a linked list

Capacity is optional. You can set the capacity during initialization to prevent excessive expansion. If not set, the default capacity is Integer.MAX_VALUE.

use

"Job Stealing" Pattern

Thread Pool

benefit

Reduce resource consumption

Reduce the cost of thread creation and destruction by reusing created threads

Improve response speed

When a task arrives, the task can be executed immediately without waiting for the thread to be created.

Improve thread manageability

Unified allocation, tuning and monitoring

Executor

Executors

Static factory class provides static factory methods of Executor, ExecutorService, ScheduledExecutorService, ThreadFactory, Callable and other classes

ThreadPoolExecutor

Parameter meaning

corePoolSize

The number of core threads in the thread pool

maximumPoolSize

The maximum number of threads allowed in the thread pool

keepAliveTime

Thread idle time

unit

unit of keepAliveTime

workQueue

A blocking queue used to hold tasks waiting to be executed

blocking queue used

ArrayBlockingQueue

LinkedBlockingQueue

SynchronousQueue

PriorityBlockingQueue

threadFactory

Factory used to set up thread creation

DefaultThreadFactory

handler

RejectedExecutionHandler, thread pool rejection strategy

Classification

AbortPolicy: Throw an exception directly, default policy

CallerRunsPolicy: Use the thread where the caller is located to execute tasks

DiscardOldestPolicy: Discard the frontmost task in the blocking queue and execute the current task

DiscardPolicy: Discard the task directly

Thread pool classification

newFixedThreadPool

Reusable thread pool with a fixed number of threads

analyze

corePoolSize is consistent with maximumPoolSize

Using an "unbounded" queue LinkedBlockingQueue

maximumPoolSize, keepAliveTime, RejectedExecutionHandler invalid

newCachedThreadPool

Executor using a single worker thread

analyze

corePoolSize and maximumPoolSize are set to 1

Use LinkedBlockingQueue as workerQueue

newSingleThreadExecutor

A thread pool that creates new threads as needed

analyze

corePoolSize is set to 0

maximumPoolSize is set to Integer.MAX_VALUE

SynchronousQueue as WorkerQueue

If the main thread submits tasks faster than the threads in the maximumPool process tasks, CachedThreadPool will continue to create new threads, which may exhaust CPU and memory resources.

Task submission

Executor.execute()

ExecutorService.submit()

Task execution

Implementation process

Thread pool tuning

Two models

Thread pool monitoring

ScheduledThreadPoolExecutor

Inherited from ThreadPoolExecutor

Run the task after a given delay, or execute the task periodically

DelayQueue is used internally to implement it, and scheduled tasks will be put into DelayQueue. DelayQueue internally encapsulates PriorityQueue, which sorts the ScheduledFutureTasks in the queue.

Future

Asynchronous calculation

Future

Provide operations

Cancellation of execution tasks

Query whether the task is completed

Get task execution results

FutureTask

Implement the RunnableFuture interface, which can be executed as a Runnable or used as a Future to get the return value of Callable

Internally implemented based on AQS