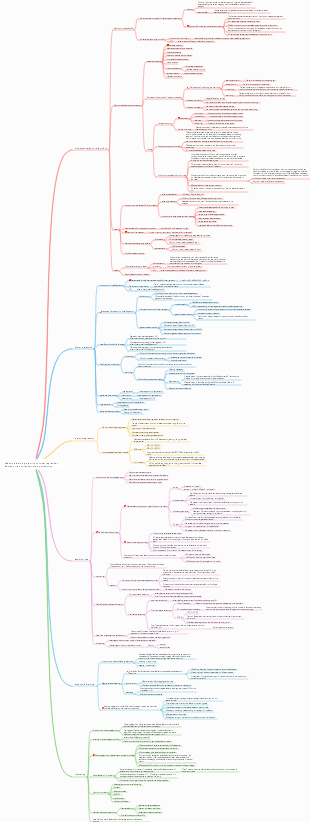

Basic regression algorithm for machine learning

This mind map summarizes the basic regression algorithms in machine learning: basic linear regression, recursive learning, regularized linear regression, sparse linear regression (Lasso), linear basis function regression, singular value decomposition, and the error decomposition of regression learning.

Edited at 2023-02-15 23:14:30

machine learning Basic regression algorithm

regression learning

Features

supervised learning

Data set with label y

learning process

The process of determining model parameters w

prediction or extrapolation

The process of computing the regression output for new inputs

linear regression

basic linear regression

target linear function

Gaussian error assumption

The output value differs from the labeled value by an error term

Assuming the model output is the expected value, the random variable (labeled value) yi follows a Gaussian distribution whose mean is the model output

Since the samples are independent and identically distributed, the joint probability density function of all labeled values is the product of the per-sample densities

Maximize the likelihood function to find the optimal parameters (least squares, LS, solution)

log likelihood function

error sum of squares

maximum likelihood solution
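The likelihood steps above can be written out as follows. This is a sketch under assumed notation that the outline itself does not give: $\bar{\mathbf{x}}_i$ is the input with a constant 1 appended, $\sigma^2$ is the noise variance, $N$ is the number of samples, and $\mathbf{X}$ is the data matrix whose rows are $\bar{\mathbf{x}}_i^{\mathsf T}$. The last line additionally assumes that $\mathbf{X}^{\mathsf T}\mathbf{X}$ is invertible.

```latex
\begin{aligned}
p(y_i \mid \mathbf{x}_i, \mathbf{w})
  &= \frac{1}{\sqrt{2\pi\sigma^2}}
     \exp\!\left(-\frac{(y_i - \mathbf{w}^{\mathsf T}\bar{\mathbf{x}}_i)^2}{2\sigma^2}\right) \\
p(\mathbf{y} \mid \mathbf{X}, \mathbf{w})
  &= \prod_{i=1}^{N} p(y_i \mid \mathbf{x}_i, \mathbf{w}) \\
\ln p(\mathbf{y} \mid \mathbf{X}, \mathbf{w})
  &= -\frac{N}{2}\ln(2\pi\sigma^2)
     - \frac{1}{2\sigma^2}\sum_{i=1}^{N}(y_i - \mathbf{w}^{\mathsf T}\bar{\mathbf{x}}_i)^2 \\
J(\mathbf{w})
  &= \sum_{i=1}^{N}(y_i - \mathbf{w}^{\mathsf T}\bar{\mathbf{x}}_i)^2
   = \lVert \mathbf{y} - \mathbf{X}\mathbf{w} \rVert_2^2 \\
\mathbf{w}_{\mathrm{ML}}
  &= \arg\min_{\mathbf{w}} J(\mathbf{w})
   = (\mathbf{X}^{\mathsf T}\mathbf{X})^{-1}\mathbf{X}^{\mathsf T}\mathbf{y}
\end{aligned}
```

Only the second term of the log likelihood depends on w, so maximizing the likelihood is the same as minimizing the error sum of squares J(w).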

Mean squared error (MSE) formula for testing
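A minimal NumPy sketch of the least squares solution and the mean squared error test may help here. The synthetic data, the made-up coefficient vector `true_w`, and the helper names are assumptions for illustration and do not come from the mind map.

```python
import numpy as np

def add_bias_column(X):
    """Prepend a column of ones so that w[0] acts as the intercept."""
    return np.column_stack([np.ones(len(X)), X])

def fit_least_squares(X, y):
    """Return the w that minimizes the error sum of squares ||y - Xb w||^2."""
    Xb = add_bias_column(X)
    # lstsq solves the least squares problem without explicitly inverting Xb^T Xb
    w, *_ = np.linalg.lstsq(Xb, y, rcond=None)
    return w

def predict(X, w):
    return add_bias_column(X) @ w

def mean_squared_error(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

# Synthetic data: a made-up linear function plus Gaussian noise
rng = np.random.default_rng(0)
true_w = np.array([0.5, 2.0, -1.0, 3.0])   # [intercept, w1, w2, w3]
X_train = rng.normal(size=(200, 3))
y_train = true_w[0] + X_train @ true_w[1:] + 0.1 * rng.normal(size=200)
X_test = rng.normal(size=(50, 3))
y_test = true_w[0] + X_test @ true_w[1:] + 0.1 * rng.normal(size=50)

w_hat = fit_least_squares(X_train, y_train)
test_mse = mean_squared_error(y_test, predict(X_test, w_hat))
print(w_hat, test_mse)
```

The test MSE is computed on samples that were not used for fitting, which is what makes it a check on the learned parameters rather than a restatement of the training error.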

Recursive learning for linear regression

Problem addressed

The problem is too large to solve the matrix equation directly

gradient descent algorithm

Take all samples to calculate the average gradient

average gradient

Recursion formula

Stochastic gradient descent (SGD) algorithm (LMS)

Take one randomly chosen sample to calculate the gradient

stochastic gradient

Recursion formula

Mini-batch SGD algorithm

Take a small batch of samples to calculate the average gradient

average gradient

Recursion formula
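The three recursive schemes above differ only in how many samples feed each gradient, so one function with a `batch_size` argument can sketch all of them. The step size `lr`, the epoch counts, and the synthetic data are assumptions chosen for illustration. Samples are drawn by shuffling once per epoch, which is one common way to randomize and not necessarily the scheme the mind map intends.

```python
import numpy as np

def average_gradient(w, X, y):
    """Average gradient of 0.5 * (x_i^T w - y_i)^2 over the rows passed in."""
    return X.T @ (X @ w - y) / len(y)

def train(X, y, batch_size, lr=0.05, epochs=50, seed=0):
    """Recursion w <- w - lr * g, where g uses batch_size samples per update."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    for _ in range(epochs):
        order = rng.permutation(n_samples)           # reshuffle every epoch
        for start in range(0, n_samples, batch_size):
            batch = order[start:start + batch_size]
            w = w - lr * average_gradient(w, X[batch], y[batch])
    return w

# Synthetic data; the first column of ones carries the intercept
rng = np.random.default_rng(0)
X = np.column_stack([np.ones(300), rng.normal(size=(300, 2))])
w_true = np.array([1.0, -2.0, 0.5])
y = X @ w_true + 0.05 * rng.normal(size=300)

w_batch = train(X, y, batch_size=300, epochs=300)  # gradient descent on all samples
w_sgd = train(X, y, batch_size=1, epochs=20)       # SGD / LMS: one sample per update
w_mini = train(X, y, batch_size=32, epochs=50)     # mini-batch SGD
print(w_batch, w_sgd, w_mini)
```

With a constant step size, the single-sample variant keeps fluctuating around the least squares solution instead of settling exactly on it, while the full-batch variant converges smoothly but touches every sample per update.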

regularized linear regression

Problem addressed

The condition number of the matrix is very large, so the solution is numerically unstable.

Cause of the large condition number

Some column vectors of the matrix are proportional or approximately proportional

The weight coefficients are redundant, and overfitting occurs.

Solution

Either "reduce the number of model parameters" or "regularize the model parameters"

Regularized objective function

Error sum of squares J(w) plus a penalty term, weighted by the hyperparameter λ, that constrains the parameter vector w

form

Regularized least squares LS solution

Regularized Linear Regression Probability Interpretation

It is the Bayesian maximum a posteriori (MAP) estimate when the prior distribution of the weight coefficient vector w is Gaussian

Gradient recursion algorithm (using mini-batch stochastic gradient descent, SGD, as an example)
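The effect of regularization on a badly conditioned problem can be sketched as follows, assuming the penalty is the squared 2-norm λ||w||² (which is what a Gaussian prior on w leads to). The two nearly proportional columns, the value λ = 1, and the omission of an intercept are illustrative assumptions.

```python
import numpy as np

def ridge_solution(X, y, lam):
    """Minimize ||y - X w||^2 + lam * ||w||^2 by solving (X^T X + lam I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(1)
x1 = rng.normal(size=100)
x2 = x1 + 1e-6 * rng.normal(size=100)      # almost proportional to x1
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.1 * rng.normal(size=100)

lam = 1.0
cond_plain = np.linalg.cond(X.T @ X)                  # huge: columns nearly collinear
cond_ridge = np.linalg.cond(X.T @ X + lam * np.eye(2))
w_ridge = ridge_solution(X, y, lam)
print(cond_plain, cond_ridge, w_ridge)
```

Adding λ to every eigenvalue of XᵀX lifts the smallest one away from zero, which is why the condition number drops and the weight shared by the two redundant columns is split evenly between them.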

Multiple-output linear regression (output vector y)

Problem addressed

The output is a vector y instead of a scalar y

Error sum of squares objective function J(W)

Least squares LS solution
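For vector outputs, the objective can be read as J(W) = ||Y - XW||² in the Frobenius norm, where each column of Y holds one output component. The sketch below, on made-up data with assumed shapes, shows that solving for all columns at once gives the same W as solving one scalar regression per output.

```python
import numpy as np

rng = np.random.default_rng(2)
n_samples, n_inputs, n_outputs = 150, 3, 2

# Data matrix with a leading column of ones for the intercept
X = np.column_stack([np.ones(n_samples), rng.normal(size=(n_samples, n_inputs))])
W_true = rng.normal(size=(n_inputs + 1, n_outputs))   # made-up coefficients
Y = X @ W_true + 0.05 * rng.normal(size=(n_samples, n_outputs))

# Joint solution: lstsq accepts a matrix right-hand side
W_joint, *_ = np.linalg.lstsq(X, Y, rcond=None)

# Column-by-column solution: one scalar-output regression per output
W_columns = np.column_stack([
    np.linalg.lstsq(X, Y[:, m], rcond=None)[0] for m in range(n_outputs)
])
print(np.max(np.abs(W_joint - W_columns)))
```

The agreement reflects that the squared error sums over output components with no coupling between the columns of W.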

Sparse Linear Regression Lasso

norm of the regularization term

Norm p>1

In general none of the solution coordinates is 0, so the solution is not sparse.

Norm p=1

Most of the solution coordinates are 0, the solution is sparse, and the problem is relatively easy to solve (it is convex).

Norm p<1

Most of the solution coordinates are 0, the solution is sparse, but the problem is hard to solve (it is non-convex).

Lasso problem

Definition

Minimize the error sum of squares subject to the constraint ||w||1 ≤ t

Equivalent regularized expression

Lasso’s cyclic coordinate descent algorithm

preprocessing

Center the columns of the data matrix X to zero mean and normalize them, giving Z

Lasso's solution in single variable case

Lasso solution

Generalization of Lasso solution in multi-variable cases

Cyclic coordinate descent method CCD

First pick one of the parameters wj

Calculate the value of wj that minimizes the objective while the other parameters are held fixed

At this time, other parameters w are not optimal values, so the calculation result of wj is only an estimate.

Loop calculation

The same idea is used to calculate other parameters in a loop until the parameter estimates converge.

The partial residual ri(j) replaces yi

Mathematically identical to the single-variable case

parameter estimates
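The cyclic coordinate descent steps above can be sketched as follows. The scaling is an assumption, since the outline does not show its formulas: the objective is taken as (1/2N)||y - Zw||² + λ||w||₁ with each column of Z scaled to unit mean square, in which case the single-variable solution is the soft-thresholding function. The data and the λ values are made up.

```python
import numpy as np

def soft_threshold(a, lam):
    """Single-variable Lasso solution: shrink a toward 0 by lam, clipping at 0."""
    return np.sign(a) * max(abs(a) - lam, 0.0)

def standardize(X):
    """Center each column and scale it so that mean(z_j ** 2) == 1."""
    Xc = X - X.mean(axis=0)
    return Xc / np.sqrt((Xc ** 2).mean(axis=0))

def lasso_ccd(Z, y, lam, max_sweeps=1000, tol=1e-10):
    """Cyclic coordinate descent for (1/2N) * ||y - Z w||^2 + lam * ||w||_1."""
    n_samples, n_features = Z.shape
    w = np.zeros(n_features)
    residual = y - Z @ w                      # full residual for the current w
    for _ in range(max_sweeps):
        biggest_change = 0.0
        for j in range(n_features):
            partial = residual + Z[:, j] * w[j]   # partial residual r^(j): feature j left out
            w_new = soft_threshold(Z[:, j] @ partial / n_samples, lam)
            residual = partial - Z[:, j] * w_new
            biggest_change = max(biggest_change, abs(w_new - w[j]))
            w[j] = w_new
        if biggest_change < tol:              # estimates have converged
            break
    return w

rng = np.random.default_rng(3)
X = rng.normal(size=(200, 6))
w_true = np.array([3.0, 0.0, 0.0, -2.0, 0.0, 0.0])   # only two relevant features
y = X @ w_true + 0.1 * rng.normal(size=200)

Z = standardize(X)
y_centered = y - y.mean()
w_small = lasso_ccd(Z, y_centered, lam=0.01)
w_mid = lasso_ccd(Z, y_centered, lam=0.5)
w_big = lasso_ccd(Z, y_centered, lam=10.0)
print(w_small, w_mid, w_big)
```

Each inner step is a single-variable Lasso problem in which the partial residual plays the role of the labels, so the same soft-thresholding formula applies to every coordinate. Running it with increasing λ also shows the solution becoming sparser.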

Lasso’s LAR algorithm

Applicability

Solve the sparse regression problem under 1-norm constraints

Corresponding to the regularized regression problem

Cases

λ=0

Standard least squares problem

The larger λ is

The sparser the solution vector w of the model parameters

linear basis function regression

basis function

regression model

data matrix

regression coefficient solution
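A short sketch of basis function regression: the model stays linear in the coefficients, but the data matrix is built from basis functions of the input. Gaussian basis functions, their centers and width, and the sine target are all illustrative assumptions, as the outline does not say which basis it uses.

```python
import numpy as np

def gaussian_design_matrix(x, centers, width):
    """Data matrix: column 0 is the constant 1, column j is exp(-(x - c_j)^2 / (2 width^2))."""
    rbf = np.exp(-(x[:, None] - centers[None, :]) ** 2 / (2.0 * width ** 2))
    return np.column_stack([np.ones(len(x)), rbf])

rng = np.random.default_rng(4)
x = np.linspace(0.0, 1.0, 60)
y = np.sin(2.0 * np.pi * x) + 0.05 * rng.normal(size=x.size)

# Regression on basis functions: still a linear least squares problem in w
centers = np.linspace(0.0, 1.0, 9)
Phi = gaussian_design_matrix(x, centers, width=0.15)
w, *_ = np.linalg.lstsq(Phi, y, rcond=None)
basis_mse = float(np.mean((Phi @ w - y) ** 2))

# Baseline: a straight line fitted to the same data
line = np.column_stack([np.ones(len(x)), x])
w_line, *_ = np.linalg.lstsq(line, y, rcond=None)
line_mse = float(np.mean((line @ w_line - y) ** 2))
print(basis_mse, line_mse)
```

Because only the data matrix changes, the least squares, regularized, and recursive solutions for plain linear regression carry over unchanged.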

singular value decomposition

pseudoinverse

SVD decomposition

Regression coefficient model solution
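The pseudoinverse route can be sketched with `numpy.linalg.svd`. The tolerance `rtol` for discarding small singular values and the rank-deficient example matrix are assumptions made for illustration.

```python
import numpy as np

def pinv_via_svd(A, rtol=1e-10):
    """Pseudoinverse A+ = V diag(1/s_i) U^T, dropping singular values below rtol * s_max."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    keep = s > rtol * s[0]                 # s is sorted in descending order
    s_inv = np.zeros_like(s)
    s_inv[keep] = 1.0 / s[keep]
    return Vt.T @ (s_inv[:, None] * U.T)

rng = np.random.default_rng(5)
a = rng.normal(size=40)
b = rng.normal(size=40)
X = np.column_stack([a, b, a + b])         # third column is redundant, so rank is 2
y = 2.0 * a - b + 0.01 * rng.normal(size=40)

X_pinv = pinv_via_svd(X)
w = X_pinv @ y                             # minimum-norm least squares solution
print(w)
```

Here XᵀX is singular, so the plain least squares formula with an inverse does not apply, while the pseudoinverse still returns the least squares solution of smallest norm.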

Error decomposition for regression learning

error function

error expectation

Model

theoretical best model

Learning model

error decomposition

Model complexity and error decomposition

The model is simple

Large bias, small variance

The model is complex

Small bias, large variance

Appropriate model complexity needs to be chosen
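The trade-off can be checked with a small simulation. The expected squared error splits into irreducible noise, squared bias (the systematic deviation of the average learned model from the theoretical best model), and variance (how much the learned model changes from one training set to another). The sine target, the noise level, and the polynomial degrees 1 and 7 below are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(6)
x = np.linspace(0.0, 1.0, 15)             # fixed inputs shared by every training set
f_true = np.sin(2.0 * np.pi * x)          # stands in for the theoretical best model
noise_std = 0.3
n_trials = 500

def bias2_and_variance(degree):
    """Refit a polynomial of the given degree on many noisy data sets, then average over x."""
    preds = np.empty((n_trials, x.size))
    for t in range(n_trials):
        y = f_true + noise_std * rng.normal(size=x.size)
        coeffs = np.polyfit(x, y, degree)
        preds[t] = np.polyval(coeffs, x)
    mean_pred = preds.mean(axis=0)
    bias2 = float(np.mean((mean_pred - f_true) ** 2))
    variance = float(np.mean(preds.var(axis=0)))
    return bias2, variance

bias2_simple, var_simple = bias2_and_variance(1)     # simple model: straight line
bias2_complex, var_complex = bias2_and_variance(7)   # complex model: degree-7 polynomial
print(bias2_simple, var_simple)
print(bias2_complex, var_complex)
```

The straight line cannot follow the sine curve however much data it sees, so its bias dominates. The degree-7 polynomial follows the curve on average but reacts to the noise in each individual data set, so its variance dominates.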